Generative AI as the new foundational service

An exposé of that narrative at AWS re:Invent

Hey, thanks for reading this. My name is Olivier Dupuis and I’ve been building data products for the past 10 years or so. I’ve worked within many organizations, at different stages of data maturity and participated in scaling data products through different types of struggles. I’ve just started the RepublicOfData.io consulting agency to help data product owners scale past their struggles.

Today, I want to talk about the constant tension between two choices: stack components offered by cloud service providers, which are commoditized, stable and integrated with the rest of your ecosystem; and third-party tools that are at the forefront of their space and constantly delivering value, but at the cost of managing their integration into the ecosystem. In this case, we’re talking about introducing Generative AI to your data stack.

Always appreciate a follow and a share. Merci!

Olivier

Conference season is coming to an end. Practitioners are flying back home, their backpacks full of vendor gizmos and their heads filled with hype and dreams (or delusions depending on the conference you attended). I could talk about dbt Coalesce, the Dagster Launch Week, or Hex 3.0, or OpenAI. Instead, my attention is turning to AWS re:Invent.

Why? Well, I do have a special place in my heart for AWS. Back when I was developing web apps in Ruby on Rails, AWS came out with their first cloud services and it just blew our collective minds. Instead of reserving a physical instance in a server room from a random (aka cheapest) service provider, we could use servers on demand, for a fraction of the price.

That was amazing!

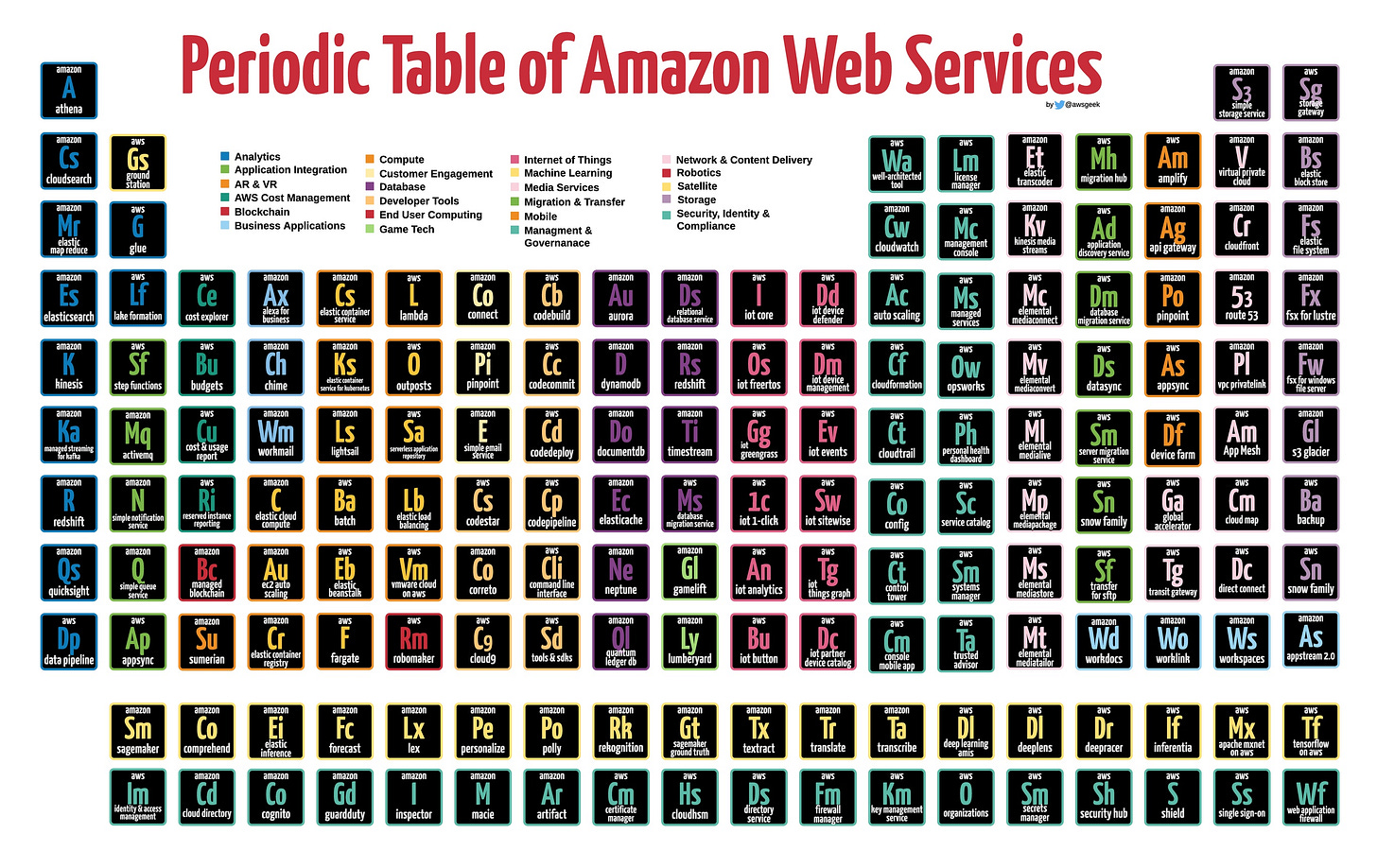

Since then, the service offering has exploded. There are other cloud providers, but AWS broke that ground and is, arguably, still leading the field.

So what does all of that have to do with building data products? Well, glad you asked.

In my last post, I talked about thinking outside the MDS box, and how that meant thinking as a product manager instead of a software engineer. Now, let me put my engineering hat back on and let’s geek out on the actual nuts and bolts of how to turn bits into insights.

Building data products one layer at a time

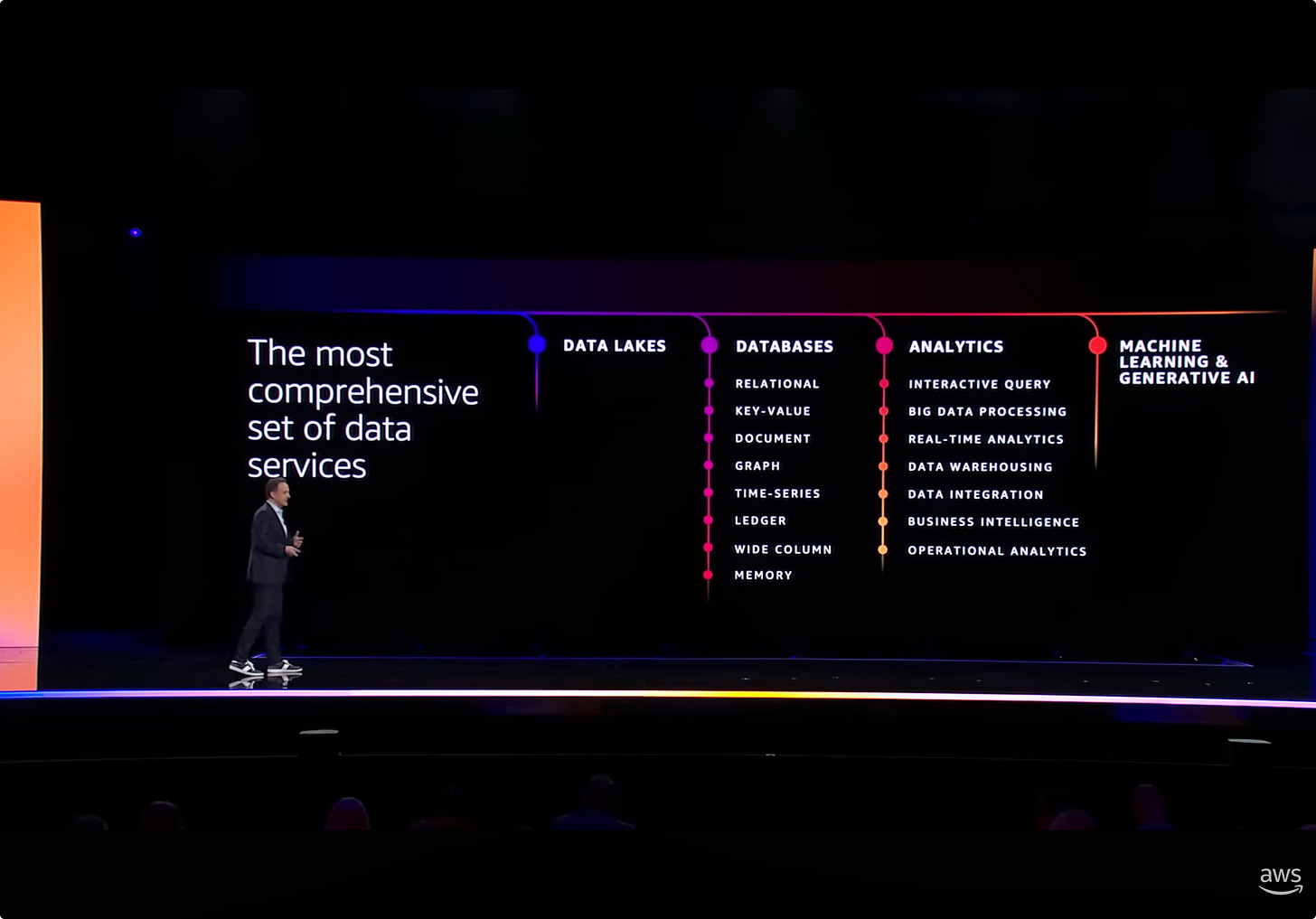

To build a data product, you need a foundational layer composed of the following services:

- Storage, to hold those precious bits of data, in multiple formats and with varying modes of accessibility.

- Data transfer, to move your data from one storage unit to another.

- Compute, to perform all your data operations.

- Security, to limit access to your analytical assets.

Once those foundations are in place, you might consider adding services around your core platform. Not all are required, but they are useful.

- Data loading, to source your data from multiple SaaS applications or external data stores, and copy it to your cloud data warehouse.

- Cloud data warehouse, to store your data for optimal analytical functions.

- ETL, to perform data transformations within your cloud data warehouse.

- Semantics, to add domain-specific meaning to your analytical assets.

- API, to provide flexible access to your analytical assets.

- BI, to consume your analytical assets and give users a controlled environment to query, visualize and monitor key metrics.

- ML, to extract additional attributes out of your data by performing statistical functions.

- Orchestration, to materialize your data assets in an optimal sequence to meet integrity, quality and freshness policies.

- Monitoring, to keep an eye on the operational performance of your data platform.
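To make the orchestration bullet a bit more concrete, here is a minimal sketch of what "materializing assets in an optimal sequence" can boil down to: sorting assets so that each one is built after the assets it depends on. The asset names and the `materialization_order` helper are hypothetical, made up for illustration, and a real orchestrator layers scheduling, retries and freshness checks on top of this.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical data assets: each key maps to the set of assets it depends on.
DEPENDENCIES = {
    "raw_orders": set(),
    "raw_customers": set(),
    "stg_orders": {"raw_orders"},
    "stg_customers": {"raw_customers"},
    "customer_revenue": {"stg_orders", "stg_customers"},
    "revenue_dashboard": {"customer_revenue"},
}


def materialization_order(dependencies):
    """Return the assets in an order where dependencies always come first."""
    return list(TopologicalSorter(dependencies).static_order())


order = materialization_order(DEPENDENCIES)
for step, asset in enumerate(order, start=1):
    print(f"{step}. materialize {asset}")
```

Several valid orders exist for the same graph (the two raw tables can be loaded in either order), which is exactly the room an orchestrator uses to run things in parallel.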

You might choose your cloud service provider to get those functionalities (and others as well), or you might also use external services that are specifically tailored to perform those functions extraordinarily well.

We’re all familiar with those services. We’ve been building our data products with those components for years now. But shit has changed drastically in the past year. It’s now a Generative AI world.

Where does that fit in our stack, and how are cloud providers such as AWS shaping the narrative for you to adopt it?

Generative AI - The next component of foundational layers

A year ago, ChatGPT was released to the world. We’ve all been messing around with it and trying to wrap our heads around how this will change our world. There’s still a lot to figure out: how to use LLMs to enhance your data; how to transform natural language into well-structured queries of your analytical assets; how to build agents that automatically perform tasks on your behalf.

We’re still early in that discovery journey, but one thing is becoming obvious: OpenAI is not the only provider of LLMs. And even though Gemini might outperform GPT-4 on some benchmarks, there are other questions to take into consideration.

Beyond the performance of models, their value for data product building might reside in their integration with your whole data ecosystem.

The interesting bit from AWS was how they recognize their role as an integrated ecosystem: one that can make your Generative AI experiments more data-aware than you would manage by taking similar capabilities from another model provider and enriching the context yourself.

That approach leverages their foundational services and lets them offer a dedicated stack with all the services you need to serve Generative AI capabilities within your data product.

Your data is your differentiator

The narrative that I heard being laid out during the AWS re:Invent keynote was that:

The power of Generative AI might be correlated to your choice of model. But its value comes from the model’s awareness of your context, your domain, your data.

Meaning that: your data is your differentiator.

You can fine-tune a model, use a RAG (Retrieval Augmented Generation) approach, or continue pre-training it. Any of those 3 approaches is quite an involved task, as you need to put your data in relation with the model you’re using for a generative AI app.
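Of the three, RAG is the easiest to picture in code. What follows is a toy sketch only, not how Bedrock or any other service does it: it ranks a few hypothetical snippets of documentation against a question using plain word-count cosine similarity, then stuffs the best match into a prompt. Real setups typically swap the word counts for embeddings and a vector store, and every name here (`DOCS`, `retrieve`, `build_prompt`) is made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=2):
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=2):
    """Assemble the prompt you would send to the model of your choice."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical snippets standing in for your own data documentation.
DOCS = [
    "Monthly churn is the share of subscribers who cancel during a calendar month.",
    "The orders table is refreshed every night at 02:00 UTC.",
    "Revenue is reported in Canadian dollars, net of refunds.",
]

prompt = build_prompt("How is churn defined?", DOCS, k=1)
print(prompt)
```

The model itself never changes here. Only the prompt does, which is why the quality of what you retrieve from your own data ends up mattering so much.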

Since your cloud provider supplies the core services for your data ecosystem (storage, transfer, compute and security), they are in a great position to deliver that « data is your differentiator » promise. Whereas model providers such as OpenAI and HuggingFace are giving you access to exceptional models, your cloud service provider is offering the differentiator that could make your data ecosystem and your data products exceptional.

That’s the promise of Amazon Bedrock - “the easiest way to build and scale generative AI applications with LLMs and other FMs”. It is AWS’s new foundational service where your data is put in relationship with your selected model. Your generative AI experience then becomes context-aware of your data ecosystem.

Generative AI - Ready for commodity?

The advantage of having most of your ecosystem within a single cloud provider is that your data is secure within the same physical network. You benefit from maximum integration. But of course it goes a bit against the MDS philosophy of picking and choosing service providers for their modularity, interconnectedness and adherence to “do one thing and do it well”.

Maybe we should just recognize the hybrid nature of our data stacks anyway. We always need to choose a data cloud provider first and foremost. Then we decide which services should come from the cloud provider and which from a third-party service provider, balancing the benefits of integration against the innovative features of those new players.

The question you need to answer is:

Is generative AI a foundational service to your data product? Or is it part of your secondary layer that benefits from being serviced by a specialized third-party provider?

Should you plug in ChatGPT and HuggingFace, stitching those into your data platforms to get access to the latest of the latest in those technologies? Or should you think of this as just another foundational service, such as storage, data transfer, compute and security, that should be deeply rooted in your data ecosystem for its benefits to flow through your data products?

I hope you enjoyed this post! Please reach out on LinkedIn if you want to chat about scaling beyond your current data product building challenges. We’re still putting together our service offering (and website) for RepublicOfData.io, but feel free to book some time with me if you want to discuss how our multi-disciplinary team can help you get unstuck within 6 weeks. Until next time 👋